WEX Inc. – Qualitative Evaluation & Human‑in‑the‑Loop Testing

-

Context

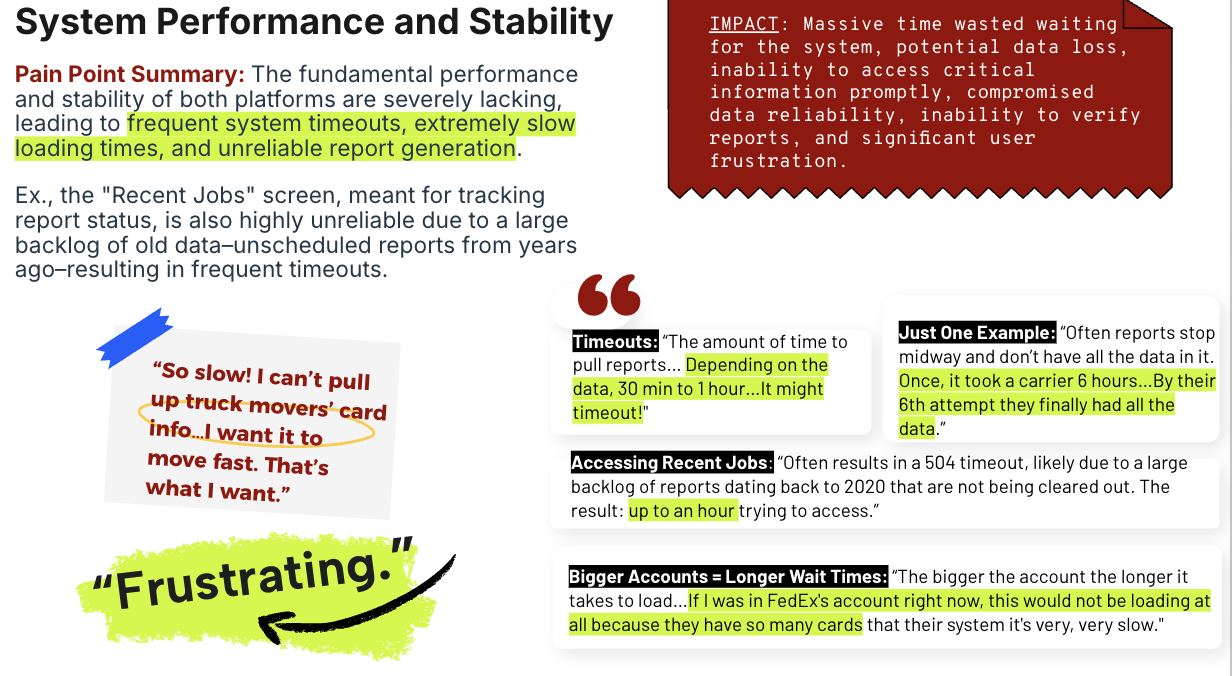

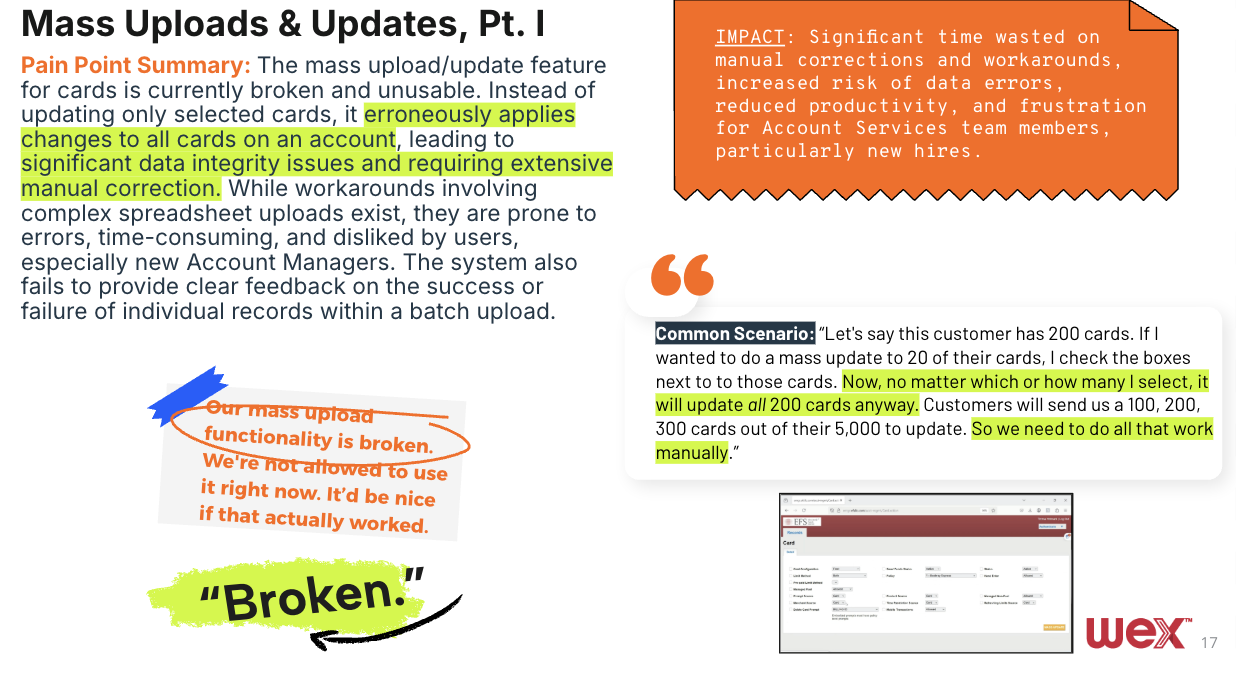

The eManager project sought to understand the usability and workflow challenges of an internal platform used daily by the Account Services team. Although eManager supported core operational tasks, significant friction in performance, information organization, and reporting reliability was affecting productivity, user confidence, and operational outcomes. This study aimed to uncover real‑world usability obstacles, reveal where automated metrics failed to surface meaningful failure modes, and inform design and technical improvements to reduce operational risk and streamline high‑stakes internal workflows.

Research Methodology

To uncover nuanced usability risks and workflow breakdowns within eManager, we conducted a remote, moderated qualitative evaluation using representative task scenarios and human‑in‑the‑loop observation.

Participants were recruited from the Account Services team itself, so that insights were grounded in domain‑specific experience. Each session involved task‑based interactions with the platform, during which participants were encouraged to think aloud and verbalize decision points, confusion, and expectations. Tasks were designed to expose friction related to performance (e.g., lag), information fragmentation, reporting reliability, and task completion.

The study captured both behavioral performance data (task success, time on task, error patterns) and attitudinal feedback (clarity, confidence, perceived usability). Following task completion, short open‑ended questions allowed participants to articulate pain points, missing elements, and preferences.

Analysis combined iterative thematic coding with severity ratings to identify recurring usability risks and edge cases that automated logging had not surfaced. Findings were synthesized into actionable recommendations that informed priority technical fixes, workflow redesigns, and new self‑service feature proposals, helping to reduce operational risk and providing stronger evidence for roadmap decisions.
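As an illustration of how coded observations and severity ratings can be combined into a priority ranking, here is a minimal Python sketch. The theme names, the 1 to 4 severity scale, and the scoring rule (participants affected multiplied by mean severity) are assumptions made for the example, not the study's actual data or scoring method.

```python
from collections import defaultdict

# Hypothetical coded observations: (participant_id, theme, severity on a 1-4 scale).
# Names and ratings are illustrative only, not data from the study.
observations = [
    ("P1", "slow report loading", 3),
    ("P2", "slow report loading", 4),
    ("P3", "slow report loading", 3),
    ("P1", "fragmented account info", 2),
    ("P3", "fragmented account info", 2),
    ("P2", "unreliable export", 4),
    ("P4", "unreliable export", 4),
]

def rank_themes(observations):
    """Rank themes by reach (distinct participants) times mean severity."""
    participants = defaultdict(set)
    severities = defaultdict(list)
    for pid, theme, severity in observations:
        participants[theme].add(pid)
        severities[theme].append(severity)

    rows = []
    for theme, pids in participants.items():
        reach = len(pids)
        mean_severity = sum(severities[theme]) / len(severities[theme])
        # Assumed scoring rule: reach * mean severity.
        rows.append((theme, reach, round(mean_severity, 2), round(reach * mean_severity, 2)))
    # Highest score first.
    return sorted(rows, key=lambda row: row[3], reverse=True)

for theme, reach, mean_severity, score in rank_themes(observations):
    print(f"{theme}: reach={reach}, mean severity={mean_severity}, score={score}")
```

A score like this only orders themes for discussion; the severity ratings themselves still come from researcher judgment during coding.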

-

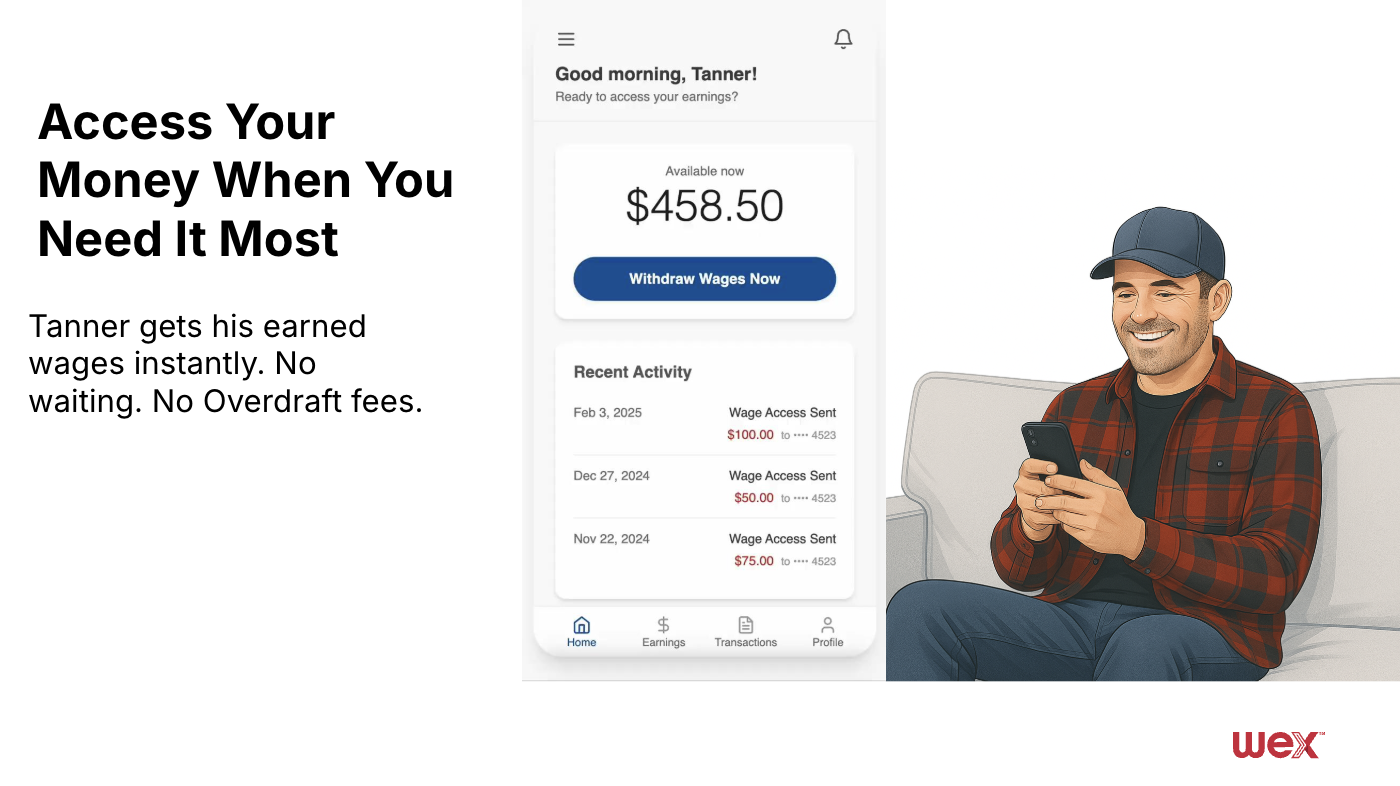

Context

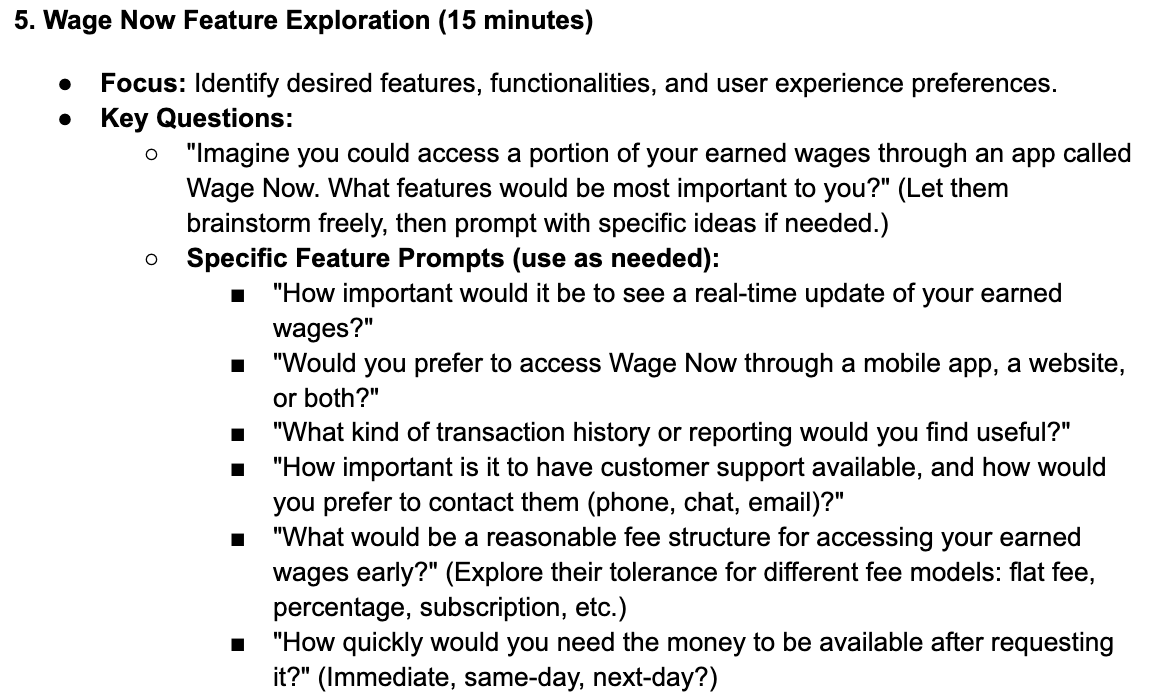

The Wage Now study evaluated usability and comprehension challenges in a high‑stakes financial workflow that gives users early access to earned wages. The product combines sensitive transactional data with critical decision points (e.g., payout options, fees, eligibility criteria), where errors or misunderstandings could damage trust and customer outcomes. The goal was to identify friction in core tasks, understand how users interpret financial information, and support product refinements that reduce risk and improve clarity.

Research Methodology

To assess user comprehension and decision clarity in Wage Now, we conducted remote, moderated usability sessions with representative participants who matched typical end‑user profiles. Each participant completed a series of task‑based interactions that reflected essential workflows, such as initiating a request, understanding fee structures, and navigating eligibility messaging.

Using a think‑aloud protocol, participants were prompted to verbalize their reasoning, expectations, and points of confusion during task execution. Following each task set, brief structured questions elicited deeper qualitative feedback on perceived clarity, confidence in decisions, and emotional response to system messaging.

Quantitative usability metrics were captured, including:

Task completion success and error occurrence

Time on task

Misinterpretation frequency

Confidence ratings for financial comprehension
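The metrics listed above can be summarized per task with a few lines of Python. This is a sketch under stated assumptions: the record fields (`task`, `success`, `seconds`, `misreads`, `confidence`), the 1 to 5 confidence scale, and all values are hypothetical and are not taken from the study.

```python
from statistics import mean, median

# Hypothetical session records; field names and values are illustrative only.
sessions = [
    {"task": "request payout", "success": True, "seconds": 48, "misreads": 0, "confidence": 5},
    {"task": "request payout", "success": True, "seconds": 62, "misreads": 1, "confidence": 4},
    {"task": "request payout", "success": False, "seconds": 95, "misreads": 2, "confidence": 2},
    {"task": "understand fees", "success": True, "seconds": 30, "misreads": 1, "confidence": 3},
    {"task": "understand fees", "success": False, "seconds": 80, "misreads": 3, "confidence": 2},
]

def summarize(sessions):
    """Group records by task and compute descriptive usability metrics."""
    by_task = {}
    for record in sessions:
        by_task.setdefault(record["task"], []).append(record)

    summary = {}
    for task, rows in by_task.items():
        summary[task] = {
            "n": len(rows),
            # Share of sessions in which the task was completed.
            "completion_rate": sum(r["success"] for r in rows) / len(rows),
            # Median is less sensitive than the mean to one very slow session.
            "median_seconds": median(r["seconds"] for r in rows),
            "misreads_per_session": mean(r["misreads"] for r in rows),
            "mean_confidence": mean(r["confidence"] for r in rows),
        }
    return summary

for task, metrics in summarize(sessions).items():
    print(task, metrics)
```

With the small samples typical of moderated sessions, figures like these are descriptive and are best read alongside the qualitative observations rather than as statistical estimates.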

Qualitative data were analyzed through thematic coding, grouping observations by pain‑point patterns, trust barriers, and unclear terminology. The analysis revealed critical usability risks, informed messaging and UI refinements, and guided the prioritization of clarity improvements that directly reduced user risk and operational friction.

Comparative evaluation scorecard used to prioritize feature investments and mitigate risk

-

Context

The Telematics research focused on how users understand and interact with vehicle usage data, tracking insights, and related analytics in an enterprise mobility context. Users relied on telematics data to make operational decisions, yet inconsistent terminology, unclear visualizations, and cognitive disconnects between data and action created usability challenges. The study aimed to map these issues, understand the misalignment between user mental models and interface structures, and recommend design adjustments that improve interpretability and workflow efficiency.

Research Methodology

The Telematics study used a mixed‑methods usability approach, combining task‑based remote sessions with qualitative probing to evaluate how users make sense of telematics data and complete real‑world tasks.

Participants were recruited to reflect target user personas with experience in fleet or vehicle data interpretation. During remote, moderated sessions, participants completed scenarios involving data interpretation, report navigation, and decision‑oriented tasks. Sessions were guided with a think‑aloud protocol, encouraging participants to articulate their reasoning as they interpreted charts, dashboards, and textual summaries.

Measures collected included:

Task success and patterns of misinterpretation

Time on task for key workflows

Cognitive load indicators (hesitation, repeated actions)

Self‑reported clarity and confidence

Following task completion, structured follow‑ups elicited narrative feedback on confusion points, terminology misunderstandings, and suggestions for improvement. Data were aggregated and analyzed with thematic coding to identify patterns of difficulty, inconsistent mental models, and areas where visual cues failed to support interpretability.

Findings were synthesized into prioritized design recommendations, including terminology adjustments, visualization refinements, and workflow simplifications. These recommendations were subsequently integrated into roadmap planning and UI redesign discussions to improve user outcomes and data comprehension.