Supporting Artifacts — Enterprise UX Research Program (SingleStore)

These materials illustrate how research was operationalized at scale across multiple enterprise products. They are shared to demonstrate process rigor, reuse, and decision enablement, not to serve as exhaustive documentation.

---

Context

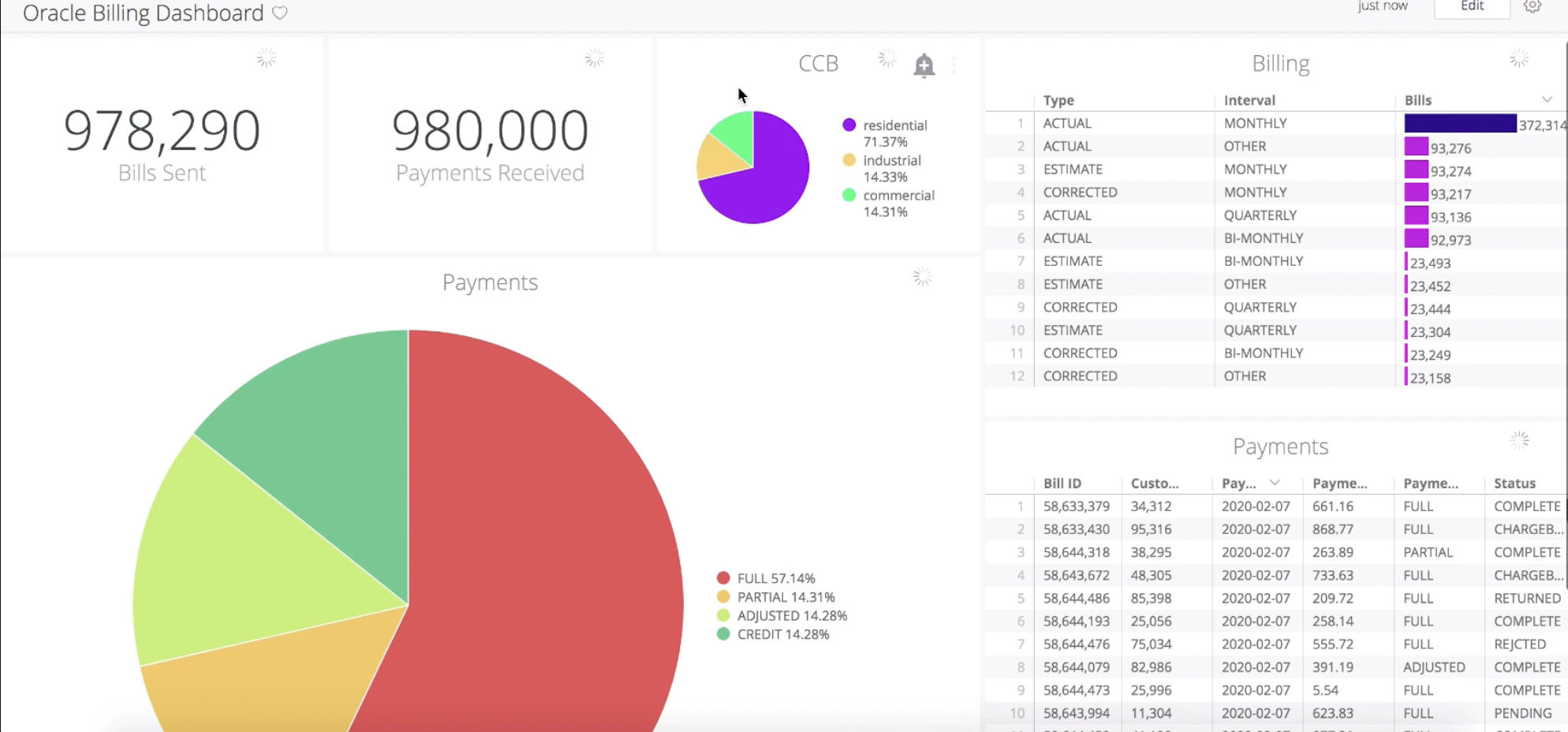

This project examined how enterprise users interact with cross-product research dashboards and how dashboard metrics influence their prioritization decisions.

Research Methodology

To evaluate the effectiveness and usability of the Dashboards environment, we ran a structured mixed-methods study designed to capture both behavioral performance data and rich qualitative feedback.

We conducted remote, moderated sessions in which participants completed representative tasks using the dashboards. These tasks mirrored real decision workflows, such as prioritizing insights, interpreting metric relationships, and navigating between data summaries. This let us observe how well users could locate, interpret, and act on critical information.

Throughout each session, participants were encouraged to think aloud, verbalizing their reasoning, strategies, points of confusion, and decision criteria. Each task was followed by short post-task questions and open-ended discussion to probe their perceptions of usability, clarity, and confidence in the insights presented.

Our study captured key quantitative usability metrics including:

- Task completion success rate
- Time on task
- Accuracy in interpretation
- Ease of navigation and discoverability

Qualitative data focused on pain points related to information grouping, dashboard terminology, and how well the dashboards matched users' mental models. We analyzed the results thematically to identify patterns of confusion, sources of cognitive load, and opportunities to strengthen users' confidence in their interpretations.

Findings from this study informed the prioritization of dashboard enhancements and guided design decisions that increased clarity, reduced interpretation risk, and supported more confident product decisions across enterprise workflows.

---

Context

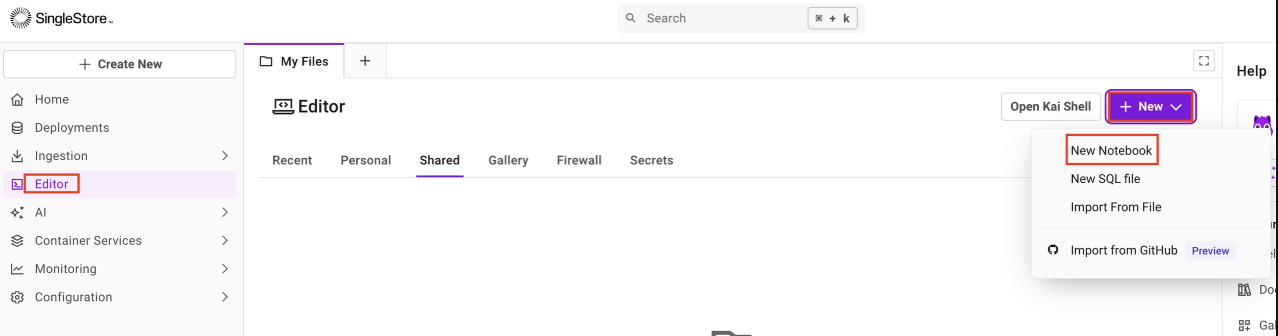

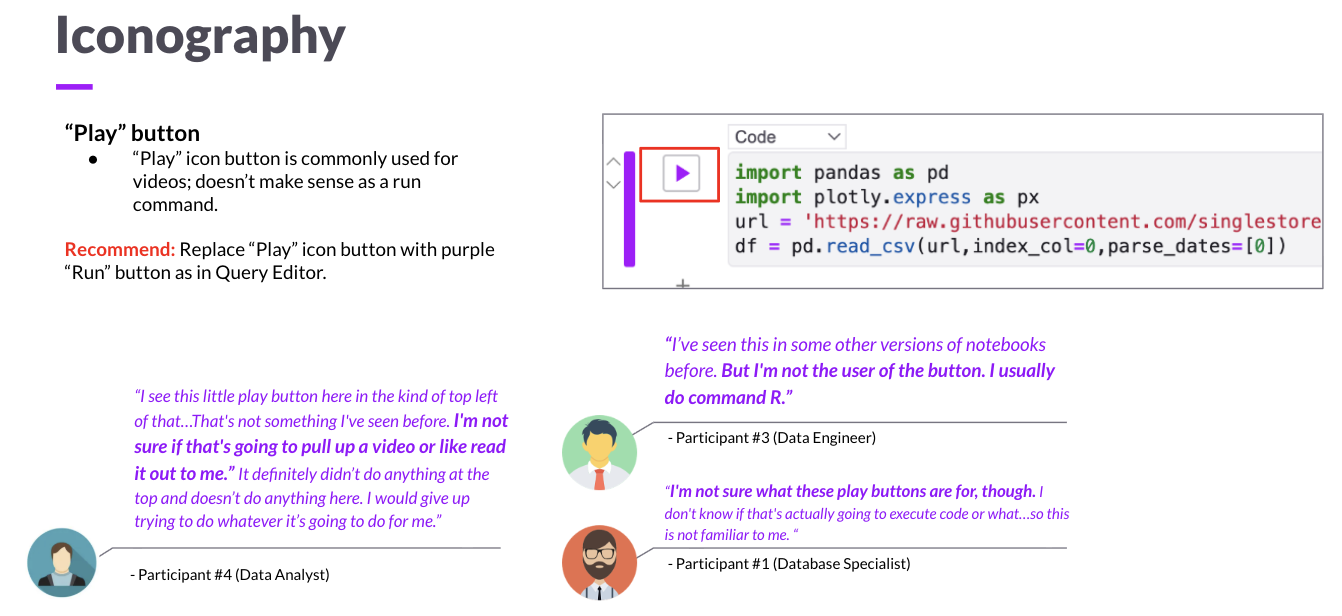

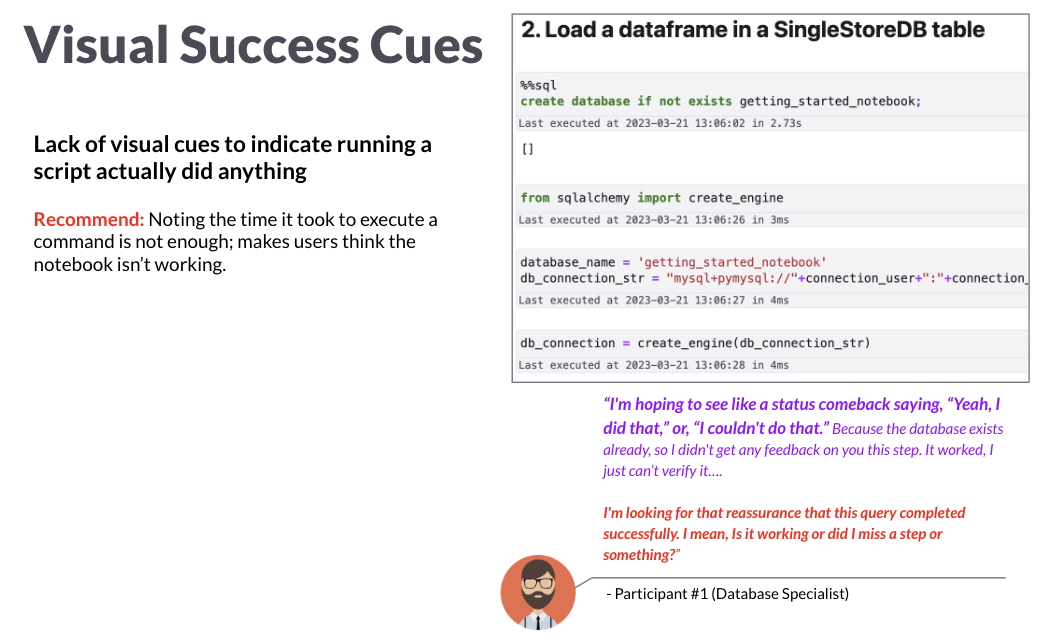

This study focused on the usability of Notebooks, a new in-app feature that lets users save, organize, and share scripts in a centralized location rather than on their local machines or in personal cloud storage. The goal was to gather general usability feedback, identify pain points, spot missing elements, and capture how different user segments (e.g., App vs. BI users) approached setup, organization, and sharing workflows. Insights from this study directly informed feature refinements, UI adjustments, and the prioritization of enhancements to improve the overall user experience.

Research Methodology

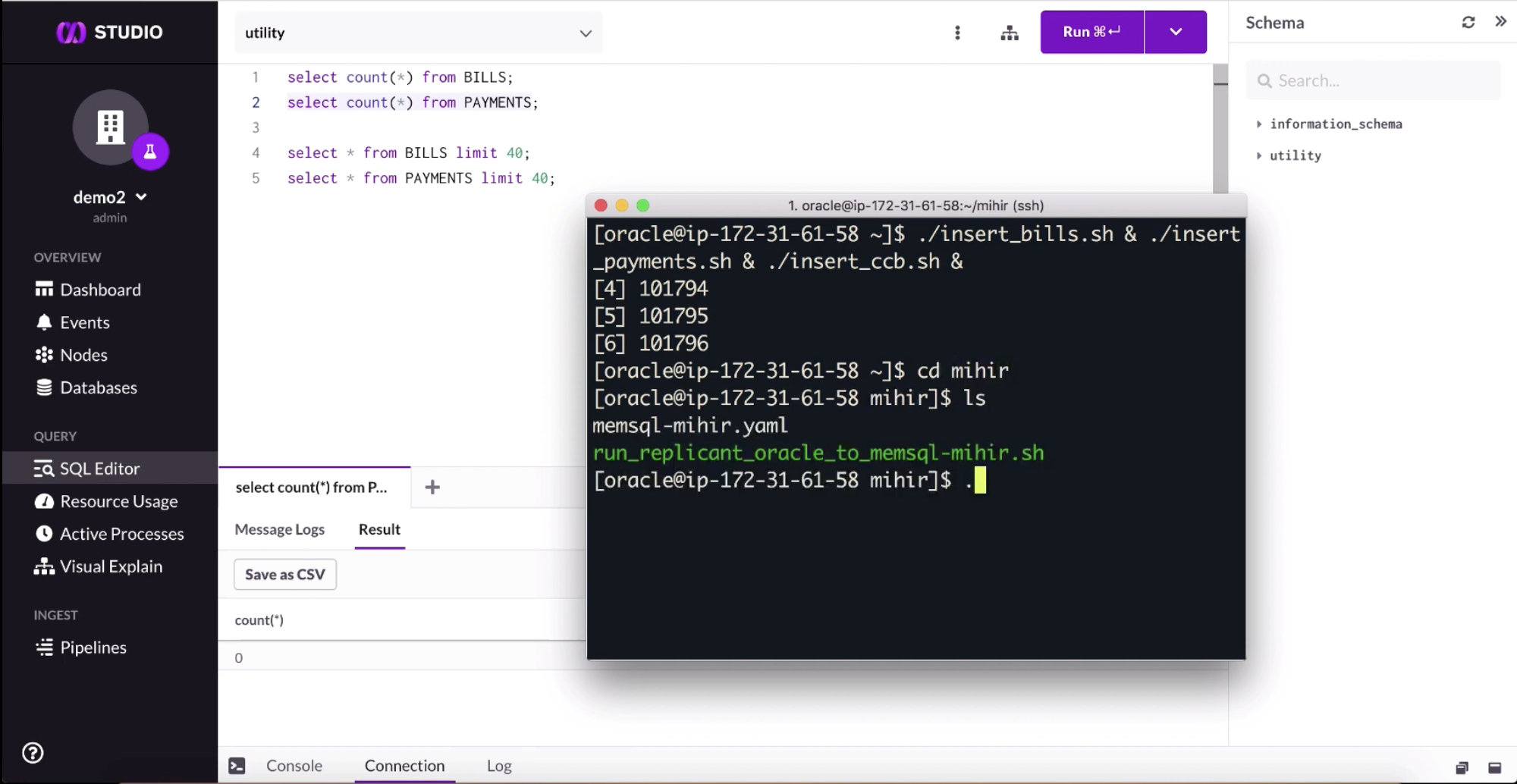

This study evaluated the usability and early reception of Notebooks, a new feature designed to let users save and share scripts directly within the application rather than manage them locally or in external cloud tools.

We conducted remote, moderated usability interviews using a think-aloud protocol, allowing participants to interact with a local instance of the product while verbalizing their decision-making, expectations, and points of friction in real time. Sessions focused on representative task scenarios related to notebook setup, usage, and exploration of the feature’s core workflows.

Participants completed task-based activities designed to surface both behavioral performance data and attitudinal feedback. Following tasks, we used short post-task questions and open-ended discussion to probe perceptions of usability, clarity, and overall confidence in the feature.

The study captured a combination of qualitative insights and quantitative usability metrics, including:

- Task success and completion rate
- Time on task
- Ease of use and pain points
- Readability and clarity of information
- Perceived gaps, missing elements, and delighters

We paid special attention to differences across user segments (App vs. BI), work roles, and real-world use cases to understand how expectations and workflows influenced feature adoption. Findings were synthesized to identify usability risks, prioritize improvements, and inform product decisions ahead of broader preview releases.

---

Context

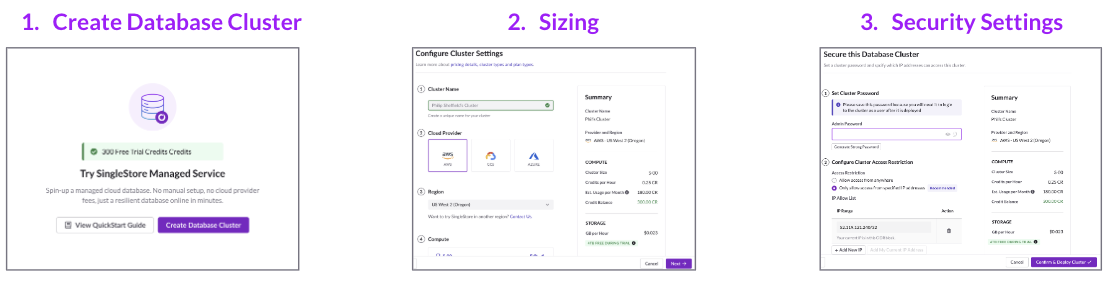

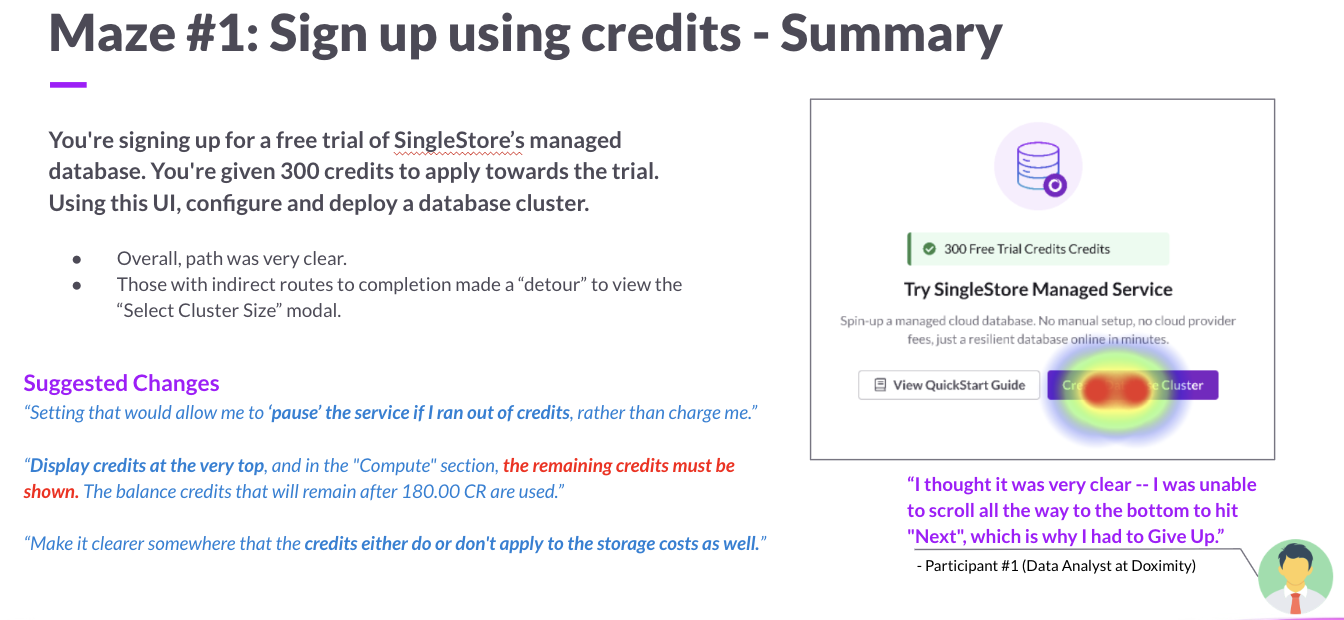

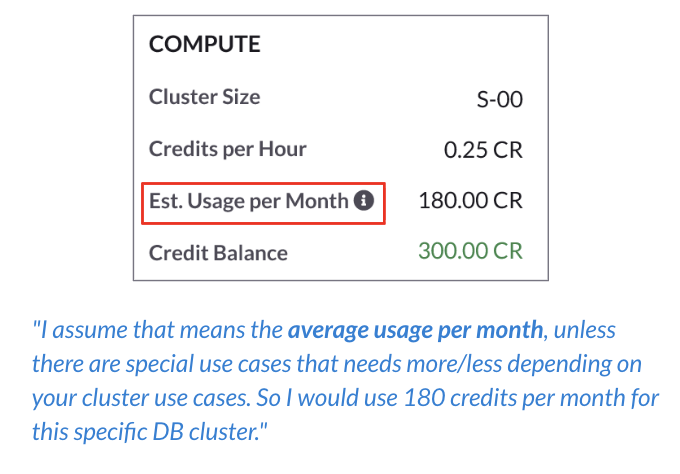

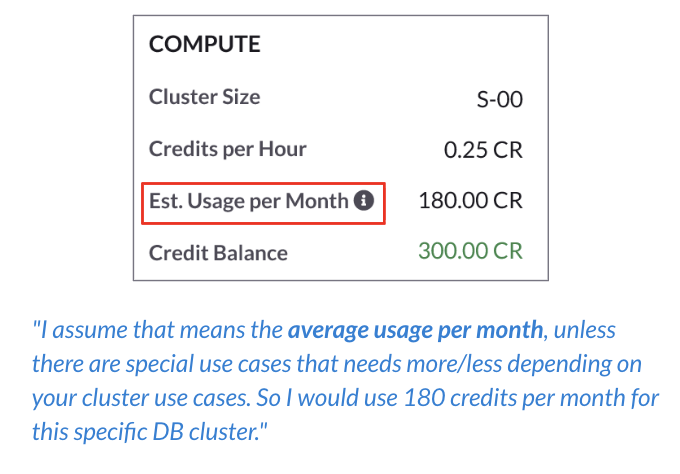

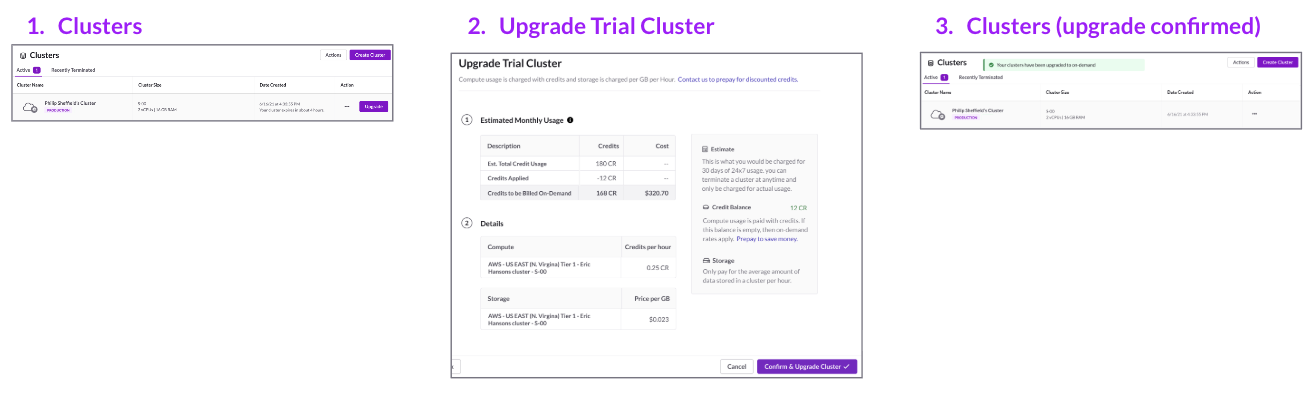

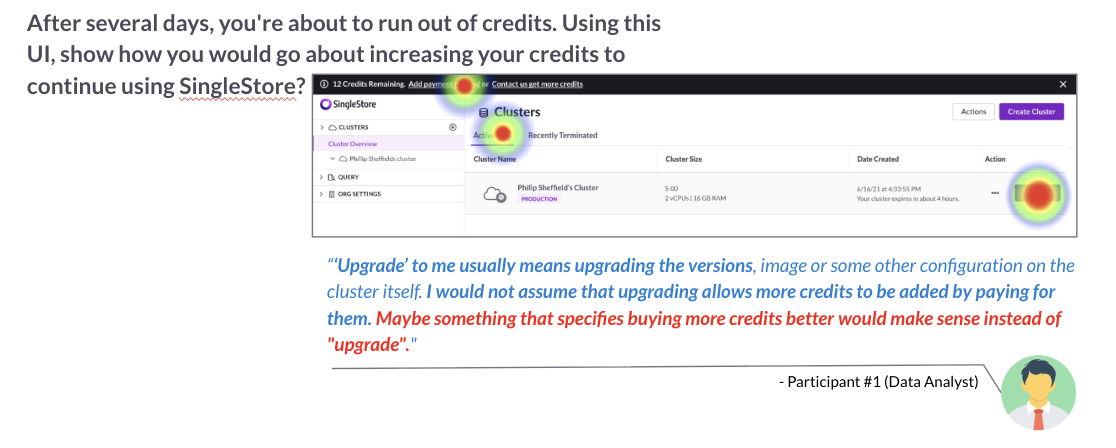

The Cluster Credits study analyzed how users understand and interact with time-based resource usage and allocation metrics, with an emphasis on terminology and UI flow.

Research Methodology

The Cluster Credits study used a mixed-methods usability approach to investigate how users interpret and act on resource usage metrics and the related interface flows within the product.

Participants from a range of technical backgrounds were recruited to complete a series of task-based interactions involving allocation visualization, cost interpretation, and quota management. These tasks were designed to surface comprehension issues, misaligned mental models, and ambiguous terminology.

Sessions were remote and moderated. Participants were encouraged to think aloud to articulate their expectations, points of confusion, and decision cues throughout each interaction. After each task, structured follow-ups and open-ended questions let us probe more deeply into users' reasoning, interpretation challenges, and suggestions for improving terminology or flow.

Quantitative usability metrics collected included:

- Task success and error incidence
- Time on critical subtasks
- Misinterpretation frequency
- Confidence ratings for interpreting visuals

We analyzed qualitative feedback using iterative thematic coding to identify recurring issues tied to information architecture, label clarity, and visualization interpretation. These insights were synthesized into actionable recommendations that influenced terminology refinement, UI flow adjustments, and the prioritization of visual clarity enhancements in the product.